Free-Hand 3D Manipulation

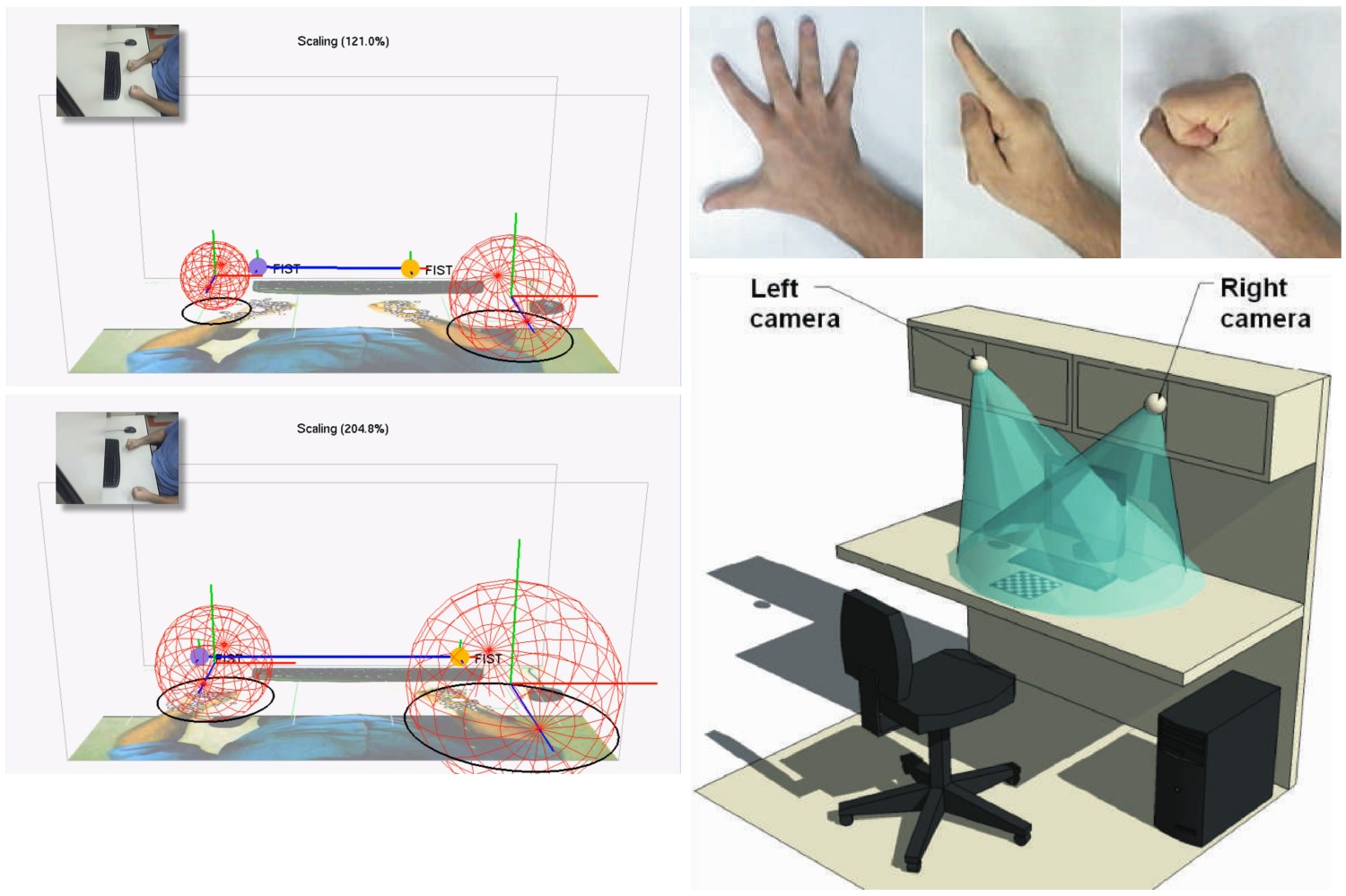

This project developed a prototype of an interactive Mixed Reality application for direct spatial manipulation, based on vision-based recognition of uninstrumented hands. The prototype dynamically integrated both of the user’s hands into the virtual 3D/4D environment, effectively creating a logical 2 × 3 DoF (degrees of freedom) manipulation device. In conjunction with hand gestures, this afforded a number of fundamental 3D manipulation operations such as select, deselect, translate, rotate, and scale.

Direct 3D manipulation using vision-based recognition of uninstrumented hands.
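
As a rough illustration of how two tracked 3D hand positions can drive translate, rotate, and scale, here is a minimal sketch of a common two-handle scheme. It is not the prototype's actual implementation: the function name `two_hand_delta`, the tuple-based point format, and the choice of the midpoint as the pivot are all assumptions made for this example.

```python
import math

def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def _norm(a):
    return math.sqrt(_dot(a, a))

def two_hand_delta(left0, right0, left1, right1):
    """Hypothetical helper: derive (translation, scale, axis, angle)
    from the hand positions in a previous and a current frame."""
    # Translation: how far the midpoint between the hands has moved.
    mid0 = tuple((l + r) / 2 for l, r in zip(left0, right0))
    mid1 = tuple((l + r) / 2 for l, r in zip(left1, right1))
    translation = _sub(mid1, mid0)

    # Scale: ratio of the distances between the hands.
    v0 = _sub(right0, left0)
    v1 = _sub(right1, left1)
    len0, len1 = _norm(v0), _norm(v1)
    if len0 == 0 or len1 == 0:
        raise ValueError("hands must be at distinct positions")
    scale = len1 / len0

    # Rotation: axis-angle that turns the old inter-hand vector
    # onto the new one.
    c = _cross(v0, v1)
    c_len = _norm(c)
    angle = math.atan2(c_len, _dot(v0, v1))
    # The axis is undefined for parallel vectors; fall back to an
    # arbitrary axis (assumption) since the angle is then 0 or pi.
    axis = tuple(x / c_len for x in c) if c_len > 1e-12 else (0.0, 0.0, 1.0)
    return translation, scale, axis, angle
```

Calling `two_hand_delta((-1, 0, 0), (1, 0, 0), (1, -1, 1), (1, 3, 1))` describes hands that moved by one unit along each axis, doubled their separation, and turned a quarter turn about the z axis. Because only positions are used, a twist about the line joining the hands cannot be recovered this way.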

Evaluation Study (Quantitative)

Using the system described, I measured tracking-related latencies of 7 to 30 ms with one hand tracked (i.e., with two trackers active), and up to 60 ms with both hands tracked (i.e., with all four trackers active). Interaction frame rates ranged from 8 to 15 frames per second (fps).

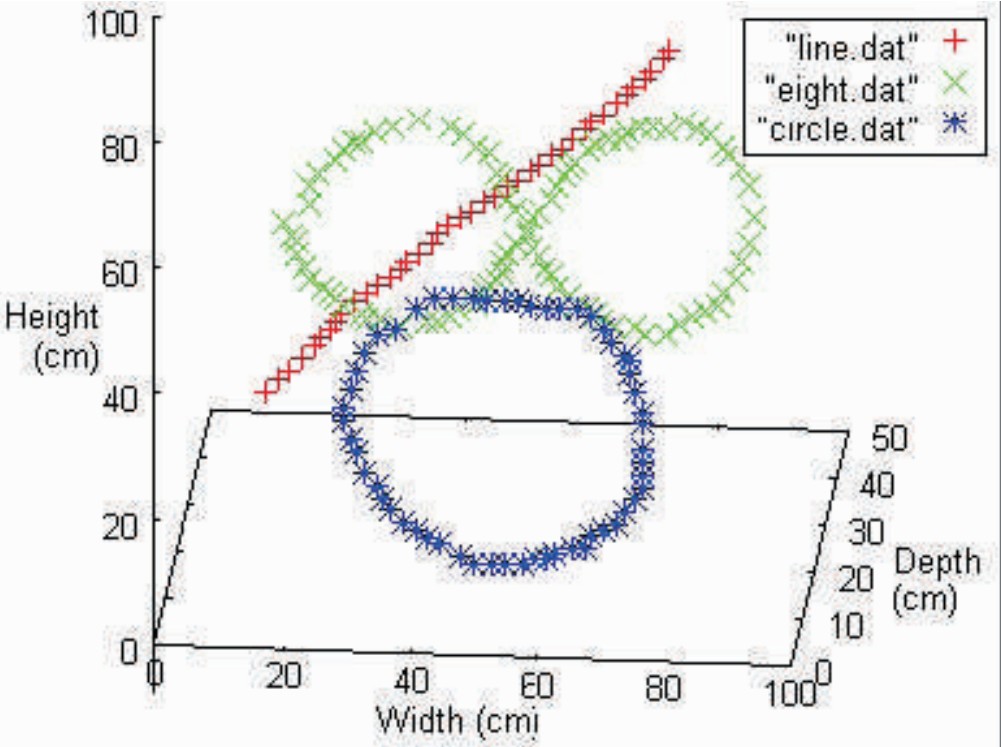

Three-dimensional plot of estimated hand positions, obtained by tracing a line, a circle, and the figure "eight" in space.

I also quantitatively assessed estimation accuracy for a hand’s position, as shown in the figure above, from which one can visually deduce the amount of noise present in tracked positions.
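
One way to put a number on the noise visible in such a plot, for the straight-line trace, is the RMS perpendicular distance of the samples from their best-fit line. The sketch below is an illustrative assumption and not the procedure used in the study: the function name `rms_line_residual` is hypothetical, and the line direction is found with a simple power iteration on the 3 × 3 scatter matrix.

```python
import math

def rms_line_residual(points, iterations=100):
    """Hypothetical helper: RMS perpendicular distance of 3D points
    from their least-squares best-fit line."""
    n = len(points)
    if n < 2:
        raise ValueError("need at least two points")

    # Center the samples on their centroid.
    centroid = [sum(p[i] for p in points) / n for i in range(3)]
    centered = [[p[i] - centroid[i] for i in range(3)] for p in points]

    # 3x3 scatter matrix of the centered samples.
    cov = [[sum(q[i] * q[j] for q in centered) for j in range(3)]
           for i in range(3)]

    # Start the power iteration from the column with the largest
    # diagonal entry so the start vector is not the zero vector.
    j0 = max(range(3), key=lambda j: cov[j][j])
    d = [cov[i][j0] for i in range(3)]
    for _ in range(iterations):
        length = math.sqrt(sum(x * x for x in d))
        if length == 0:
            raise ValueError("points are coincident")
        d = [x / length for x in d]
        d = [sum(cov[i][j] * d[j] for j in range(3)) for i in range(3)]
    length = math.sqrt(sum(x * x for x in d))
    if length == 0:
        raise ValueError("points are coincident")
    d = [x / length for x in d]  # unit direction of the fitted line

    # Squared perpendicular distance = |q|^2 - (q . d)^2.
    total = 0.0
    for q in centered:
        along = sum(q[i] * d[i] for i in range(3))
        total += sum(x * x for x in q) - along * along
    return math.sqrt(max(total, 0.0) / n)
```

The result is in the same units as the tracked positions. The circle and figure-eight traces would need a different reference shape, which this sketch does not cover.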

S. Kolarić, A. Raposo, M. Gattass: Direct 3D Manipulation Using Vision-Based Recognition of Uninstrumented Hands, full paper, X Symposium on Virtual and Augmented Reality (SVR 2008), Conference Proceedings, João Pessoa, PB, Brazil, pp. 212-220 (2008). Download at CiteSeerX